30 SQL and Database Design Questions From Data Science Interviews At Top Tech Companies

When you hear “data scientist” you think of modeling, machine learning, and other hot buzzwords. While database design and SQL are not the most sexy parts of being a data scientist, they are very important topics to brush up on before your Data Science Interview.

So here's 20 real SQL questions, and 10 real Database Design questions asked by top companies like Google, Amazon, Facebook, Databricks, Yelp, and Robinhood during the Data Science Interview. We solved 8 of the problems below, and put the rest of the answers in our book, Ace the Data Science Interview (available via Amazon Prime!). You can also practice these same SQL Interview questions on DataLemur for FREE! For example, below is a real Facebook SQL Interview question:

Data Science Interview Topics

Overview of SQL Interview Questions

The first step of analytics for most workflows involves quick slicing and dicing of data in SQL. That's why being able to write basic queries efficiently is a very important skill. Although many may think that SQL simply involves SELECTs and JOINs, there are many other operators and details involved for powerful SQL workflows. For example, utilizing subqueries is important and allows you to manipulate subsets of data by which later operations can be performed, while window functions allow you to cut data without combining rows explicitly using a GROUP BY. The questions asked within SQL are usually quite practical to the company at hand - a company like Facebook might ask about various user or app analytics question, whereas a company like Amazon will ask about products and transactions.

For a list of the most important SQL statements to review before an interview check out my ultimate SQL interview guide for Data Analysts and Data Scientists.

You can also read company specific guides & blog posts:

- Amazon SQL Interview Questions & Guide

- Google SQL Interview Questions & Guide

- Apple SQL Interview Questions & Guide

Overview of Databases Design Questions

Although it isn’t explicitly necessary to know the inner workings of databases (which is typically more data engineering oriented), it helps to have a high level understanding of basic concepts in Databases and Systems. Databases refers not to specific ones but more so how they operate at a high level and what design decisions and trade-offs are made during construction and querying. “Systems” is a broad term but refers to any set of frameworks or tools by which analysis of large volumes of data relies on. For example, a common interview topic is the MapReduce framework which is heavily utilized at many companies for parallel processing of large datasets.

20 SQL Data Science Interview Questions

- [Robinhood - Easy] Assume you are given the below tables for trades and users. Write a query to list the top 3 cities which had the highest number of completed orders.

You can also practice this same exact Robinhood SQL Interview question on DataLemur if you want multiple hints and to be able to run your solution query!

- [Facebook - Easy] Assume you have the below events table on app analytics. Write a query to get the click-through rate per app in 2019.

Here's a similar version of this Facebook SQL question on DataLemur, along with multiple hints!

- [Uber - Easy] Assume you are given the below table for spending activity by product type. Write a query to calculate the cumulative spend for each product over time in chronological order.

- [Snapchat - Easy] Assume you have the below tables on sessions that users have, and a users table. Write a query to get the active user count of daily cohorts.

- [Facebook - Easy] Assume you are given the below tables on users and user posts. Write a query to get the distribution of the number of posts per user.

- [Amazon - Easy] Assume you are given the below table on purchases from users. Write a query to get the number of people that purchased at least one product on multiple days.

- [Opendoor - Easy] Assume you are given the below table on house prices from various zip codes that have been listed. Write a query to get the top 5 zip codes by market share of house prices for any zip code with at least 10000 houses.

- [Etsy - Easy] Assume you are given the below table on transactions from users for purchases. Write a query to get the list of customers where their earliest purchase was at least $50.

- [Disney - Easy] Assume you are given the below table on watch times (in minutes) for all users, where each user is based in a given city. Write a query to return all pairs of cities that have total watch times within 10000 minutes of one another.

- [Twitter - Easy] Assume you are given the below table on tweets by each user over a period of time. Calculate the 7-day rolling average of tweets by each user for every date.

Hint: Rolling averages mean you'll need a window function! In case you need a refresher, check out my 30 day learn SQL roadmap, which has my list of favorite FREE SQL resources and how I'd study them to go from SQL zero to SQL HERO!

- [Stitch Fix - Easy] Assume you are given the below table on transactions from users. Write a query to get the number of users and total products bought per latest transaction date where each user is bucketed into their latest transaction date.

- [Amazon - Easy] Assume you are given the below table on customer spend amounts on products in various categories. Calculate the top three most bought item within each category in 2020.

- [DoorDash - Easy] Assume you are given the below table on transactions on delivery locations and times for meals - a start location, an end location, and timestamp for a given meal_id. Certain locations are aggregation locations - where meals get sent to, and where meals then go to their final destination. Calculate the delivery time per meal to each final destination from a particular aggregation location, loc_id = 4.

- [Facebook - Medium] Assume you have the below tables on user actions. Write a query to get the active user retention by month.

- [Twitter - Medium] Assume you are given the below tables for the session activity of users. Write a query to assign ranks to users by the total session duration for the different session types they have had between a start date (2020-01-01) and an end date (2020-02-01).

- [Snapchat - Medium] Assume you are given the below tables on users and their time spent on sending and opening Snaps. Write a query to get the breakdown for each age breakdown of the percentage of time spent on sending versus opening snaps.

- [Google - Medium] Assume you are given the below table on sessions from users, with a given start and end time. A session is concurrent with another session if they overlap in the start and end times. Write a query to output the session that is concurrent with the largest number of other sessions.

- [Yelp - Medium] Assume you are given the below table on reviews from users. Define a top-rated place as a business whose reviews only consist of 4 or 5 stars. Write a query to get the number and percentage of businesses that are top-rated places.

- [Google - Medium] Assume you are given the below table of measurement values from a sensor for several days. Each measurement can happen several times in a given day. Write a query to output the sum of values for every odd measurement and the sum of values for every even measurement by date.

If you want hints, along with an in-depth solution, go solve this Google SQL Interview Question on DataLemur!

- [Etsy - Medium] Assume you are given the below table on transactions from various product search results from users on Etsy. For every given product keyword, there are multiple positions that being A/B tested, and user feedback is collected on the relevance of results (from 1-5). There are many displays for each position of every product, each of which is captured by a display_id. Define a highly relevant display as one whereby the corresponding relevance score is at least 4. Write a query to get all products having at least one position with > 80% highly relevant displays.

10 Database And Systems Design Interview Questions

- [MongoDB - Easy] For each of the ACID properties, give a one-sentence description of each property and why are these properties important?

- [VMWare - Easy] What are the three major steps of database design? Describe each step.

- [Microsoft - Easy] What are the requirements for a primary key?

- [DataStax - Easy] A B+ tree can contain a maximum of 5 pointers in a node. What is the minimum number of keys in leaves?

- [Databricks - Easy] Describe MapReduce and the operations involved.

- [Microsoft - Easy] Name one major similarity and difference between a WHERE clause and a HAVING clause in SQL (this is important to know if you want to solve this eBay SQL Interview Question)

- [Facebook - Easy] How does a trigger allow you to build business logic into a database?

- [DataStax - Easy] What are the six levels of database security and briefly explain what each one entails?

- [Databricks - Easy] Say you have a shuffle operator, whereby the input is a dataset and the output is simply a randomly ordered version of that dataset. Describe the algorithm steps in English.

- [Rubrik - Medium] Describe what a cache is and what a block is. Say you have a fixed amount of total data storage - what are the some trade-offs in varying block size?

7 Real Amazon SQL Interview Questions

For additional practice, I put together some insights on the Amazon SQL interview process for Data Analysts & Data Scientists, and curated 7 real Amazon SQL interview questions in the blog below:

SQL And Database Interview Solutions

Problem #4 Solution:

By definition, daily cohorts are active users from a particular day. First, we can use a subquery to get the sessions of new users by day using an inner join with users. This is to filter for only active users by a particular join date for the cohort. Then we can get a distinct count to return the active user count:

Problem #8 Solution:

Although we could use a self join on transaction_date = MIN(transaction_date) for each user, we can also use the RANK() window function to get the ordering of purchase by customer, and then use that subquery to filter on customers where the first purchase (rank one) is at least 50 dollars. Note that this requires the subquery to include spend as well

Problem #11 Solution:

First, we need to get the latest transaction date for each user, along with the number of products they have purchased. This can be done in a subquery where we GROUP BY user_id and take a COUNT(DISTINCT product_id) to get the number of products they have purchased, and a MAX(transaction_date) to get the latest transaction date (while casting to a date). Then, using this subquery, we can simply do an aggregation by the transaction date column in the previous subquery, while doing a COUNT() on the number of users, and a SUM() on the number of products:

Problem #16 Solution:

We can get the breakdown of total time spent on each activity by each user by filtering out for the activity_type and taking the sum of time spent. In doing this, we want to do an outer join with the age bucket to get the total time by age bucket for both activity types. This results in the below two subqueries. Then, we can use these two subqueries to sum them by joining on the appropriate age bucket and take the proportion for send time and the proportion for open time per age bucket:

Problem #18 Solution:

First, we need to get the places where the reviews are all 4 or 5 stars. We can do this using a HAVING clause, instead of a WHERE clause since the reviews need to all be 4 stars or above. For the HAVING condition, we can use a CASE statement that filters for 4 or 5 stars and then take a SUM over them. This can then be compared with the total row count of the particular business_id reviews to ensure that the count of top reviews matches with the total review count. With the relevant businesses, we can then do an outer join with the original table on business_id to get a COUNT of distinct business_id matches, and then the percentage by comparing the COUNT from the top places with the overall COUNT of business_id:

Problem #21 Solution:

ACID is a set of properties that ensures that even in the event of errors, power outages, and other unforeseen circumstances, a database will still hold up. It is an important framework for studying database systems.

A: Atomicity, meaning that an entire transaction happens as a whole or it doesn’t happen at all (no partial transactions). This prevents partial updates which can be problematic. Therefore, transactions cannot be “in progress” to any user.

C: Consistency, meaning that there are integrity constraints such that the database is consistent before and after a given transaction. Essentially, if I search for what is in Row 3 and then do so again without any modifications to the database (no deletes or inserts), I should get the same result. Any referential integrity is handled by appropriate checks for the primary and foreign keys.

I: Isolation, meaning that transactions happen in isolation and thus multiple transactions can occur independently without interference. This maintains concurrency properly.

D: Durability, meaning that once a transaction is complete, that information is now updated in the database even in the event of a system failure.

Problem #25 Solution:

MapReduce is a framework that is heavily used in processing large datasets across a large number of clusters (many machines). Within the groups of machines, there are worker nodes (which carry out the computations) and master nodes (which delegate the tasks for each worker node). The three steps are generally as follows.

- Map step: each worker node applies a specific map operations on input data (which the master node ensures will not be duplicated) and writes the output to a memory buffer.

- Data is re-distributed based on the output keys from the prior step’s map function, such that for any given key, it is located on the same worker node.

- Each worker node processes each key in parallel using specific reduce operations to get the output result.

Since the mapping and reducing functions can all be done in parallel, the amount of processing done is only limited by the amount of compute and data available. Note that there are edge cases if there are failures from worker nodes - in those cases, the desired operations can be re-scheduled by the master node.

Problem #27 Solution:

A trigger is like a CHECK condition, but every time there is an update to the database, the trigger condition will be checked to see if it has been violated. This allows you to implement some level of control and assurance that all your data entries meet a certain condition. For instance, a trigger that states that all ID values must be > 0 will ensure that you get no null values or negative values. When someone tries to enter such a value, the entry will not go through.

That being said, there are reasons why to not include business logic within database triggers. For example: 1) introduction of side effects which lead to bugs or other unintended consequences, or 2) performance problems in which case there is a cascading effect on triggers that leads to locking and other issues.

How To Get More Data Science Interview Questions

Want more like this? Buy our 301-page data science interview prep book on Amazon! And if you want an interactive SQL interview platform DataLemur has got you covered.

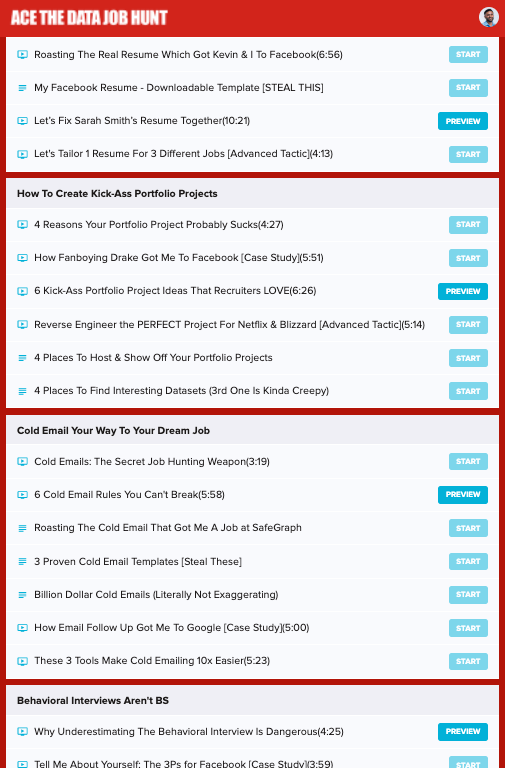

We also put out a free 9-day interview crash course, which shares a sneak-peek of each chapter from our book and my video course, Ace the Data Job Hunt!

We've also got 40 real probability & statistics interview questions asked by FANG & Wall Street, and 30 Machine Learning Interview Questions.